AI-Blog-Posts

Taming the Monolith: How to Make AI Coding Tools Work in Complex Legacy Codebases

Strategies for overcoming context limitations and maximizing AI effectiveness in established projects

⏱️ Reading Time: 11 minutes

If you’ve tried AI coding tools on a large, established codebase and walked away frustrated, you’re not alone. While AI coding tools can produce miraculous results in greenfield projects, they often struggle dramatically when faced with the complexity, constraints, and accumulated technical debt of monolithic applications.

This disparity creates a dangerous pattern: developers try AI tools on existing projects, experience poor results, and conclude that AI coding isn’t ready for “real work.” Meanwhile, teams working on new projects rave about AI’s transformative capabilities. The truth is more nuanced—AI tools can be incredibly valuable in monolithic environments, but they require different strategies and realistic expectations.

Here’s what every development team needs to know about making AI coding tools effective in complex, established projects.

Understanding the Monolithic Challenge

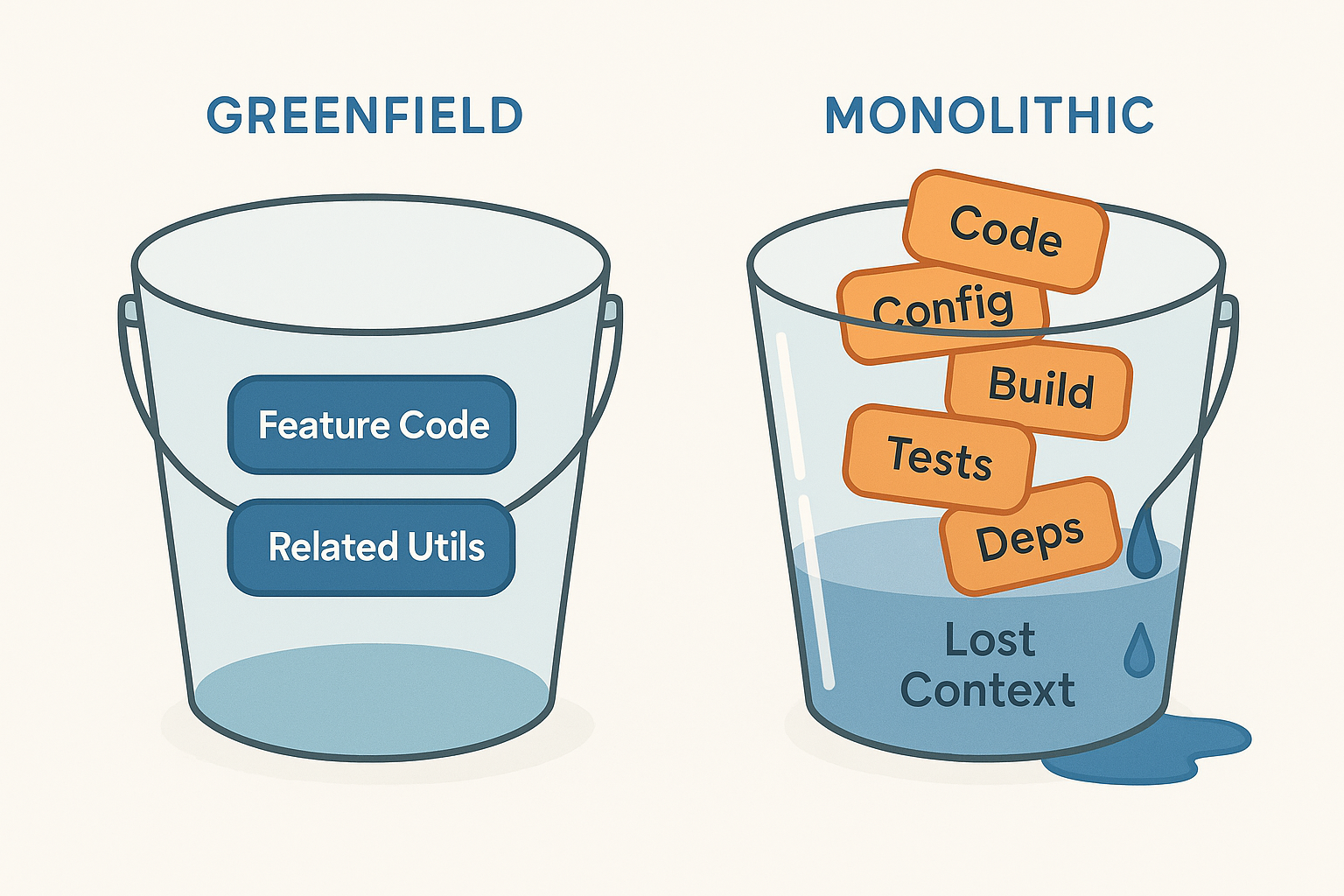

The Context Bucket Problem

To understand why AI tools struggle with large codebases, imagine the AI’s context as a bucket that can only hold a limited amount of information. In a greenfield project, this bucket contains only the relevant code you’re currently working on. But in a monolithic codebase, that bucket fills up quickly as the AI tries to ingest vast amounts of interconnected code, configuration files, build scripts, and project history.

Once the bucket is full, the AI faces an impossible choice: it can’t consume new information without discarding something it already knows. For large tasks that span across the entire codebase, this limitation makes comprehensive understanding nearly impossible.

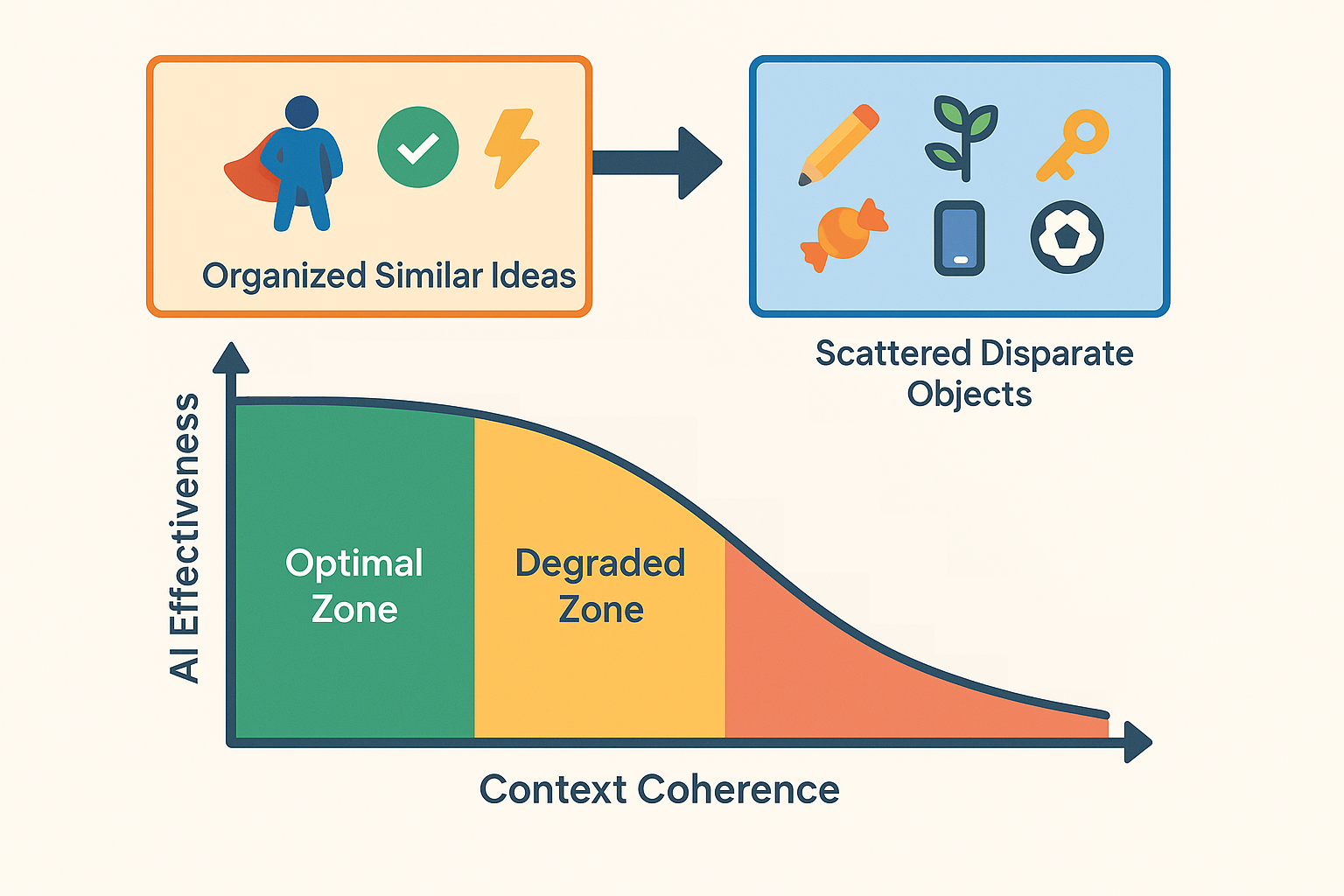

The Effective Context Dilemma

Beyond the quantity problem lies a quality issue we’ll refer to as “effective context.” Even when the AI’s context bucket isn’t completely full, the effectiveness of that context degrades as it becomes more diverse and scattered.

Think of it this way: if you throw Iron Man, Hulk, and Thor figurines into a bucket, someone can effectively classify the contents as “Marvel action figures.” But if you throw in a pencil, a pinecone, and a pack of gum, no one can meaningfully categorize what’s in the bucket.

AI context works similarly. It’s much more effective when knowledge grows within a confined category rather than trying to consume disparate parts of a large codebase. The more widespread and varied the knowledge becomes, the less effectively the AI can reason about any individual piece.

The Pre-existing Standards Problem

Monolithic codebases come with established patterns that greenfield projects don’t have to worry about:

- Build Requirements: Specific compilation steps, dependency management, and deployment processes

- Testing Standards: Particular testing frameworks, coverage requirements, and testing patterns

- Code Style: Established naming conventions, architectural patterns, and formatting rules

In greenfield projects, AI can choose these standards and typically sticks to them well. But getting AI to conform to pre-existing requirements is significantly more challenging.

Warning Signs: When Your AI Context is Breaking Down

Recognizing when AI tools are struggling with context limitations is crucial for knowing when to change strategies. Watch for these telltale signs:

Technical Indicators

- Incorrect object type usage: Using wrong classes or interfaces that don’t match your codebase

- Non-existing object types: References to classes, methods, or variables that don’t exist in your project

- File corruption: Code appearing in wrong locations, misaligned formatting, or random code fragments

- Incomplete implementations: Moving on to write tests before finishing the actual implementation

Behavioral Indicators

- Excessive “thinking”: Long pauses or verbose reasoning that suggests the AI is struggling to process information

- Repetitive tasks: Getting stuck in loops or repeatedly attempting the same failed approach

- Loss of focus: Forgetting previously established requirements or instruction files

These symptoms indicate that your AI’s context has become too muddy to be effective, and it’s time to change your approach.

When AI Struggles Signal Code Quality Issues

If AI consistently underperforms in specific areas of your codebase, this often indicates deeper technical debt or structural problems. Rather than fighting through these challenges, consider them diagnostic feedback about code that could benefit from refactoring.

AI performance patterns serve as an excellent early warning system for code that needs attention. When you find areas where AI consistently produces poor results, struggles to understand requirements, or generates buggy code, these are often the same areas that cause problems for human developers. AI as Your Code Quality Detective (12-minute read) provides a systematic approach to interpreting these signals and using AI performance as a diagnostic tool for identifying refactoring opportunities and technical debt hotspots.

Strategic Solutions for Monolithic Environments

1. Master the Art of Instruction Files

The fundamental building block for working with AI in established codebases is creating comprehensive instruction and rule files.

For Build and Test Requirements: Create executable scripts for common tasks and point your instruction files to these scripts. This ensures the AI follows your exact procedures rather than improvising.

For Style Requirements: Use industry-standard nomenclature that the AI will recognize, and provide concrete examples. AI tools excel at pattern matching, so showing examples is often more effective than describing requirements.

Pro Tip: Don’t write instruction files yourself—have an AI agent generate them. Describe what you want accomplished, and let the AI create the text in language it naturally understands.

2. Implement Smart Context Management

The Implementation Plan Approach: For large tasks, always start by having the AI create a markdown file with a detailed implementation plan. Review this plan carefully—if it’s way off course or seems impossible, you know the task won’t fit in the context bucket.

Strategic planning is critical in monolithic environments where context limitations require careful task breakdown. Planning-First AI Methodology (19-minute read) provides systematic approaches for managing complex tasks within AI context constraints.

Two-Stage Problem Breakdown: When tasks are too large:

- Manual breakdown: Break the problem into smaller chunks yourself based on your understanding of the codebase

- AI-assisted breakdown: Start with fresh context, explain the problem at a high level, and ask the AI to create high-level checkpoints

Enterprise coordination: Multi-Phase Planning Documentation Bundle (17-minute read) handles complex project coordination across multiple teams and systems.

Context Cleanliness: Stay vigilant about “muddying your context.” Think of your AI’s context like a bucket of water—the clearer the water, the more effective the AI. When you feel like you’re crossing into territory that could be its own task, start fresh context.

3. Recognize When to Push Through vs. Start Over

When to Push Through: If you’re close to completion and dealing with minor context degradation, it might be worth fighting through the AI’s mistakes to finish the task.

When to Start Over: If you’re early in the process and seeing warning signs, completely back out and rethink the problem with fresh context.

The Difficult Middle Ground: When you’re somewhere between early and late in the process, starting fresh context is usually the safer choice. Trying to clean mud out of water is nearly impossible.

4. Work Within Natural AI Boundaries

Certain practices naturally align with AI strengths even in complex environments:

Layer Limitation: Avoid working across more than four layers of your software architecture at once. Like a junior developer, AI can get confused about where it is and where it’s been when jumping between too many abstraction levels.

Focused Scope: Break work into chunks that allow the AI to maintain effective context within a single domain or feature area.

Pattern-Rich Tasks: AI excels at tasks where it can identify and follow clear patterns, even in complex codebases.

Hidden Opportunities in Monolithic Environments

Even when AI struggles with large architectural tasks, numerous opportunities exist for valuable assistance:

Investigative Tooling

One of the most underutilized applications of AI in complex codebases is creating investigative tools. When debugging or analyzing problems, you often wish you had a specific script or utility but don’t want to spend hours building it manually.

Instead of pushing through without the tool, have the AI write the script for you. AI can create complex investigative tools in minutes that would take you hours to implement manually. These “tangential” requests are easy to miss because they’re not your primary task, but they can dramatically accelerate problem-solving.

Remedial Improvements

AI excels at adding remedial code that makes future work easier:

- Enhanced Logging: AI can add comprehensive logging throughout your codebase, which it can then consume and analyze in subsequent tasks

- Documentation Generation: Creating or updating documentation for complex code sections. For systematic knowledge capture from team members who understand legacy systems, The Interview Method (10-minute read) provides a structured approach to extracting and documenting complex domain knowledge that can then be integrated into your codebase documentation.

- Test Coverage: Adding unit tests for existing functionality

- Code Comments: Improving code readability with meaningful comments

Unconventional Applications

Some of the most valuable AI assistance comes from thinking outside traditional boundaries:

- Data Analysis: Having AI analyze log files or database outputs to identify patterns

- Configuration Management: Generating or updating complex configuration files

- Migration Scripts: Creating one-time scripts for data transformation or system migration

- Monitoring and Alerting: Building custom monitoring solutions for specific codebase needs

Best Practices for Teams Getting Started

Start with Realistic Expectations

Don’t expect AI to tackle your monolithic codebase the same way it handles greenfield projects. Success comes from finding the right-sized problems and working within AI’s natural strengths.

Understanding the broader organizational context for AI adoption helps set appropriate expectations and timelines for monolithic integration. The AI Coding Revolution: Your Team’s Survival Guide (15-minute read) provides essential strategic framework for managing the transformation process, addressing stakeholder concerns, and building the business case for investing in the infrastructure and training needed for effective AI implementation in complex legacy environments.

Experiment with Repetition

Try solving the same problem multiple ways, starting fresh each time. This will take significant time initially, but you’ll develop invaluable intuition about how to interact with AI effectively in your specific codebase context.

Develop an AI-First Mindset

Constantly look for opportunities to use AI assistance. The more you use these tools, the better you’ll become at recognizing appropriate use cases and the more value you’ll extract from them.

Monolithic AI Strategy Framework

Phase 1: Infrastructure Setup

- Create comprehensive instruction files for coding standards

- Develop executable scripts for build and test procedures

- Document common project patterns with examples

- Establish context management workflows

Phase 2: Gradual Integration

- Start with isolated utility functions or helper methods

- Progress to single-feature implementations in well-defined areas

- Develop expertise with instruction file optimization

- Build team knowledge of effective context management

Phase 3: Complex Implementation

- Tackle multi-component features with proven context strategies

- Implement cross-cutting concerns using multi-context workflows

- Use AI diagnostics to identify refactoring opportunities

- Leverage AI for systematic code quality improvements

Once you’ve implemented these monolithic strategies, measuring their effectiveness becomes crucial for demonstrating ROI and optimizing your approach. Measuring AI Impact in Development Teams (8-minute read) provides concrete metrics and tracking methodologies specifically designed for complex environments where traditional productivity measurements may not capture the full value of AI assistance in legacy codebases.

The Long-Term Payoff

While working with AI in monolithic codebases requires more strategy and patience than greenfield development, the investment pays substantial dividends. Teams that develop expertise in these challenging environments often find they can:

- Accelerate Maintenance Tasks: Routine updates, bug fixes, and small feature additions become much faster

- Improve Code Quality: Systematic improvements to logging, testing, and documentation become feasible

- Reduce Technical Debt: AI can help tackle refactoring tasks that were previously too time-consuming to justify

- Transfer Knowledge: AI can help document and explain complex legacy code, making it more maintainable

Conclusion: Persistence Pays

The key to success with AI coding tools in monolithic environments isn’t avoiding the challenges—it’s understanding them and developing strategies to work within the constraints. While these tools may never be as effortlessly productive in complex legacy codebases as they are in greenfield projects, they can still provide tremendous value when used thoughtfully.

The developers and teams that invest in learning these nuanced skills will find themselves with capabilities that seemed impossible just months ago. Don’t let early frustrations with monolithic codebase challenges prevent you from realizing the transformative potential of AI-assisted development. Instead, treat these challenges as learning opportunities that will make you more effective with AI tools across all types of projects.

The monolith may be complex, but with the right strategies, it doesn’t have to be insurmountable.

Related Posts

Essential Reading:

- The AI Coding Revolution: Team Survival Guide - Strategic foundation for legacy system transformation

- Planning-First AI Methodology Foundation - Essential preparation for complex legacy work

- AI Code Quality Detective: Hunting Technical Debt - Identify and prioritize legacy improvement opportunities

Dive Deeper:

- Multi-Phase Planning Documentation Bundles - Coordinate complex legacy transformations

- AI Interview Method for Scattered Thoughts - Extract and organize legacy system knowledge