AI-Blog-Posts

AI as Your Code Quality Detective: Using AI Struggles to Identify Technical Debt

How AI performance degradation reveals hidden code quality issues and creates opportunities for strategic refactoring

⏱️ Reading Time: 11 minutes

One of the most overlooked benefits of working with AI coding tools is their unexpected ability to serve as automated code quality auditors. When AI consistently struggles in certain areas of your codebase, it’s not just a productivity problem—it’s a diagnostic signal pointing to deeper technical debt and structural issues that affect both AI and human developers.

This diagnostic capability transforms AI from merely a coding assistant into a quality assessment tool that can guide strategic refactoring decisions and long-term codebase improvement efforts.

Understanding AI as a Quality Indicator

The Core Insight

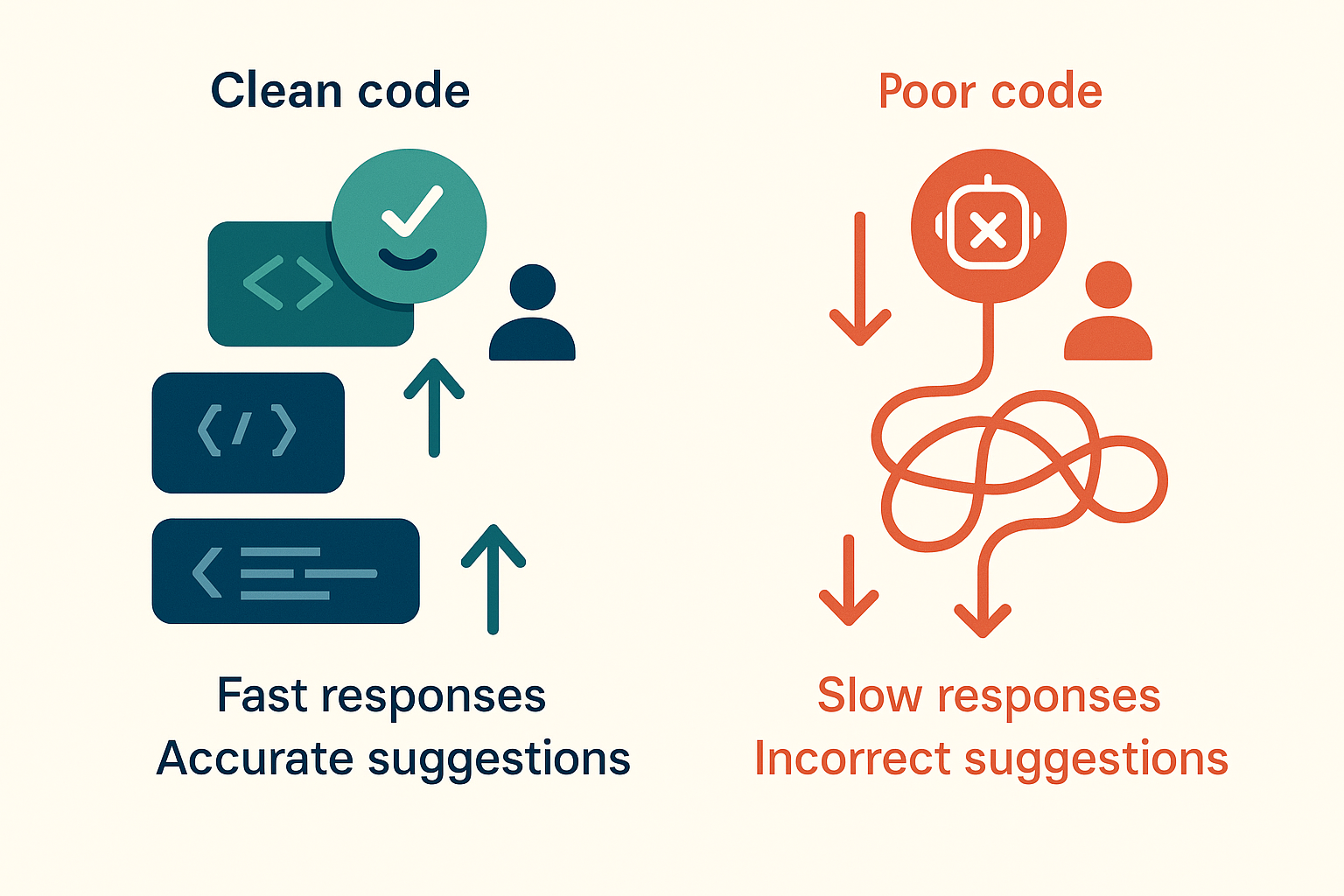

AI coding tools excel in clean, well-structured codebases but struggle dramatically in areas with poor code quality. This isn’t a limitation—it’s a feature. The same structural problems that confuse AI agents also confuse human developers, making AI performance a reliable proxy for code quality assessment.

When you consistently see AI underperform in specific areas, you’re witnessing automated feedback about code that likely violates fundamental software engineering principles.

Why AI Struggles Correlate with Code Quality

AI agents work best when they can:

- Understand clear relationships between components and dependencies

- Follow consistent patterns in naming, structure, and organization

- Predict logical next steps based on established architectural conventions

- Maintain context about what each piece of code is responsible for

Poor code quality disrupts all of these capabilities, creating the same challenges for AI that human developers experience when working in problematic areas.

Recognizing the Warning Signs

Primary AI Performance Indicators

Watch for these specific behaviors that signal potential code quality issues:

Incorrect Type Usage:

- Using wrong classes or interfaces that don’t match your codebase

- Confusing similar-looking but functionally different types

- Defaulting to generic types when specific ones are available

Property and Method Errors:

- Referencing properties that don’t exist on the specified objects

- Calling methods that aren’t available in the current context

- Making up methods that seem logical but don’t actually exist

Code Duplication:

- Creating functionality that already exists elsewhere in the codebase

- Reimplementing patterns instead of using established utilities

- Building new solutions instead of leveraging existing infrastructure

Scope Creep and Tangential Modifications:

- Modifying code in surrounding areas that wasn’t part of the original task

- “Cleaning up” or refactoring nearby code without being asked

- Making changes to unrelated functions or methods in the same file

- Attempting to fix perceived problems in adjacent code structures

Secondary Struggle Indicators

Excessive Prompting Effort: When you find yourself working unusually hard to prompt the AI for something that should be straightforward, the complexity often lies in the code structure rather than the task itself. In clean codebases, simple tasks require simple prompts.

Persistent Context Confusion: AI repeatedly loses track of what it’s working on or makes assumptions that don’t align with the actual codebase structure.

Pattern Recognition Failure: AI can’t identify or follow the established patterns in your code, suggesting those patterns may be inconsistent or poorly implemented.

Distinguishing Real Issues from AI Limitations

Not every AI struggle indicates code quality problems. Consider these factors:

Task Complexity: Inherently complex algorithms or business logic may challenge AI regardless of code quality.

Domain-Specific Knowledge: Highly specialized domain requirements may require extensive context that’s difficult for AI to process.

Legacy Constraints: Sometimes poor structure is unavoidable due to integration requirements with legacy systems.

Assessment Method: Use pattern recognition rather than timing measurements. Consistent struggle across multiple interactions in the same area is more reliable than isolated incidents or timing variations.

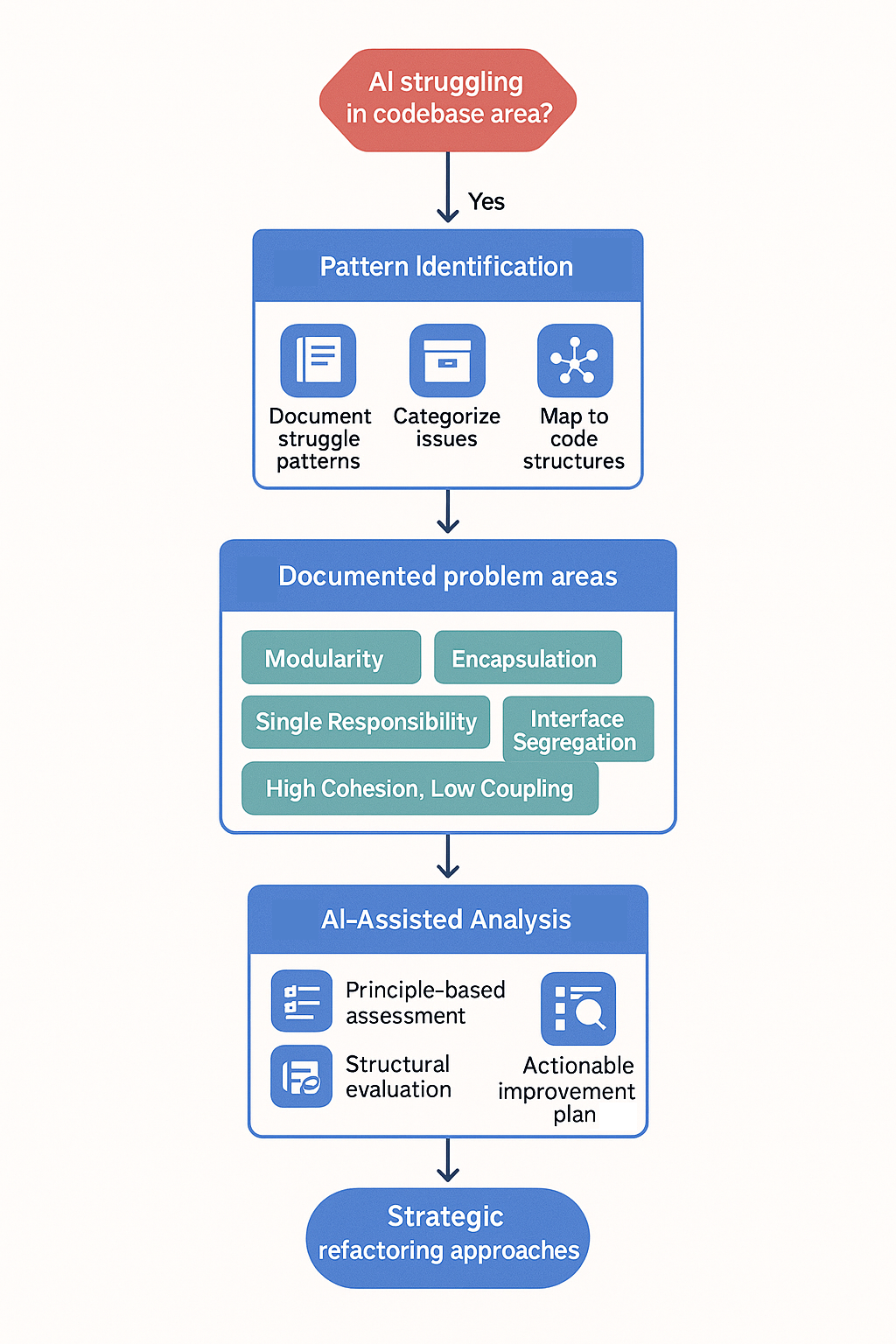

The Diagnostic Process

Step 1: Pattern Identification

When AI struggles become apparent, conduct a systematic assessment:

Document Struggle Patterns: Note specific areas where AI consistently underperforms across multiple sessions and tasks.

Categorize Issues: Group problems by type (incorrect types, missing methods, architectural confusion, etc.).

Map to Code Structures: Identify which files, classes, or modules correlate with AI performance problems.

Step 2: Software Principle Analysis

Evaluate problematic areas against core software engineering principles:

Modularity: Are responsibilities clearly separated into distinct, focused modules?

Encapsulation: Are internal details properly hidden and interfaces well-defined?

Single Responsibility: Does each class or function have a clear, singular purpose?

Interface Segregation: Are interfaces focused and clients not forced to depend on methods they don’t use?

KISS (Keep It Simple, Stupid): Is the code as simple as possible while still meet requirements?

YAGNI (You Aren’t Gonna Need It): Is the code free from premature optimization and unnecessary complexity?

High Cohesion, Low Coupling: Are related functionalities grouped together while minimizing dependencies between components?

Step 3: AI-Assisted Analysis

Leverage AI itself to diagnose the problems:

Principle-Based Assessment: Ask the AI to analyze the problematic area against the software engineering principles listed above.

Structural Evaluation: Have the AI identify specific violations of good design practices in the code.

Refactoring Plan Generation: Request a comprehensive plan for improving the code quality before any implementation begins.

Strategic Refactoring Approaches

Decision Framework: When to Refactor

Immediate Refactoring Indicators:

- Multiple team members report difficulty working in the area

- AI consistently fails to complete simple tasks in the code

- Bug frequency is higher than average in the problematic area

- New feature development is significantly slower in this section

Prioritization Factors:

- Frequency of interaction: Areas touched more often should be prioritized

- Impact scope: Problems affecting multiple features or components take precedence

- Development velocity impact: Areas that consistently slow down development work

- AI collaboration dependency: Sections where AI assistance is most valuable for future work

Two-Approach Strategy for Active Development

Approach 1: Quick Fix Then Refactor Use when you can quickly integrate your current work item into existing infrastructure:

- Complete your immediate work item with minimal integration to existing code

- Start fresh AI session for focused refactoring

- Implement improvements with dedicated attention to code quality

- Return to development with improved foundation

Approach 2: Refactor First Use when the current work item is too painful to complete in the existing structure:

- Stop current development work

- Start fresh AI session focused entirely on refactoring

- Implement structural improvements first

- Return to original work item with new AI session in cleaner codebase

The decision between approaches depends on the pain level of continuing with existing code versus the benefit of refactoring first.

AI-Assisted Refactoring Process

Planning Phase:

- Have AI create comprehensive refactoring plan before any code changes

- Review plan for alignment with team standards and architectural decisions

- Ensure plan addresses specific principle violations identified in diagnosis

- Set clear scope boundaries to prevent scope creep

Systematic planning is critical for refactoring work where scope can easily expand. Planning-First AI Methodology (19-minute read) provides frameworks for focused, manageable AI refactoring efforts.

Implementation Phase:

- Use fresh AI context dedicated to refactoring work

- Work through plan systematically with regular checkpoints

- Maintain focus on single responsibility and clean interfaces

- Test frequently to ensure functionality preservation

Validation Phase:

- Verify that AI can now work more effectively in the refactored code

- Test with simple tasks that previously caused struggle

- Document improvements for team learning and future reference

Team Integration and Process

Incorporating Diagnostics into Development Workflow

Code Review Integration: Include AI performance assessment as part of regular code review discussions. Ask: “How easy was this code to work with using AI tools?”

Sprint Planning Considerations: When planning AI-assisted development work, factor in potential refactoring needs for problematic areas.

Technical Debt Tracking: Integrate AI-identified quality issues into existing technical debt management systems with appropriate prioritization.

Time-Boxing and Budget Management

Realistic Time Allocation: AI-assisted refactoring typically takes hours rather than days, making it more feasible within sprint budgets.

Time-Boxing Strategy: Set maximum time limits (2-4 hours) for refactoring efforts. If AI can’t complete improvements within the time box, reassess scope or defer work.

Success Measurement: Start with qualitative indicators rather than quantitative metrics. Success means AI works more smoothly in the area without excessive prompting effort.

For comprehensive frameworks to systematically track and validate the ROI of AI-driven refactoring efforts, Measuring AI Impact in Development Teams (8-minute read) provides specific methodologies for documenting quality improvements, measuring developer experience enhancements, and building business cases that justify continued investment in AI-guided technical debt reduction initiatives.

Team Communication and Documentation

Sharing Discoveries: Document patterns of AI struggle and successful refactoring approaches for team learning.

Knowledge Transfer: Use AI-generated refactoring plans as documentation of structural improvement decisions.

Best Practice Development: Build team understanding of code structures that work well with AI collaboration.

Long-Term Benefits and Strategic Value

Compounding Productivity Gains

Immediate Benefits:

- Reduced prompting effort for AI collaboration

- Faster development velocity in refactored areas

- Fewer debugging cycles and integration issues

Long-Term Benefits:

- Easier codebase maintenance for all developers

- Improved testing and validation processes

- Better onboarding experience for new team members

- Enhanced system reliability and predictability

AI-Optimized Code Characteristics

AI tends to generate and work best with code that exhibits:

- Clear separation of concerns with well-defined interfaces

- Consistent naming conventions that reflect functionality

- Logical organization that follows predictable patterns

- Minimal dependencies between unrelated components

- Explicit rather than implicit relationships and behaviors

These characteristics align perfectly with established software engineering best practices, creating a virtuous cycle where AI-friendly code is also human-friendly code.

Strategic Codebase Evolution

Using AI as a quality diagnostic tool enables:

Proactive Technical Debt Management: Identify problems before they become critical blockers.

Data-Driven Refactoring Decisions: Use AI performance as objective input for prioritizing improvement efforts.

Continuous Quality Improvement: Regular assessment and improvement cycles that prevent technical debt accumulation.

Team Skill Development: Learning to recognize quality issues through AI collaboration patterns improves overall development skills.

Tools and Implementation Support

Assessment Documentation

Create systematic records of:

- Problem Area Inventory: Catalog of consistently problematic code sections

- Refactoring Success Stories: Document before/after AI performance improvements

- Pattern Recognition: Common quality issues and their solutions

- Time Investment Tracking: Refactoring effort versus long-term productivity gains

Team Training and Adoption

Skills Development:

- Train team members to recognize AI struggle patterns

- Develop shared vocabulary for discussing AI-code quality relationships

- Practice collaborative refactoring techniques using AI assistance

Process Integration:

- Establish clear procedures for escalating quality concerns

- Create decision frameworks for refactoring timing and scope

- Develop metrics for measuring long-term improvement impact

For comprehensive team adoption strategies and overcoming resistance to AI quality assessment practices, see Overcoming AI Resistance.

Conclusion: AI as Quality Partnership

Using AI performance as a code quality diagnostic tool represents a fundamental shift in how we think about technical debt management and codebase improvement. Instead of relying solely on human intuition or periodic audits, we can leverage AI collaboration patterns to identify problems early and address them systematically.

The key insight is that AI doesn’t just help us write code faster—it helps us write better code and identify areas where existing code could be improved. By paying attention to where AI struggles and treating those struggles as diagnostic information, development teams can make data-driven decisions about refactoring priorities and long-term codebase health.

This approach creates a positive feedback loop: better code quality enables more effective AI collaboration, which in turn makes it easier to maintain and improve code quality over time. The result is a codebase that works well for both AI and human developers, with reduced technical debt and improved long-term maintainability.

Remember that the goal isn’t perfection—it’s continuous improvement guided by practical feedback from AI collaboration. Start by documenting where AI struggles in your codebase, analyze those areas against core software engineering principles, and use AI itself to help plan and implement targeted improvements.

Your future self, your teammates, and your AI collaborators will all benefit from the cleaner, more maintainable code that emerges from this systematic approach to quality improvement.

Related Posts

Essential Reading:

- Planning-First AI Methodology Foundation - Systematic approaches that reveal code quality patterns

- Taming the Monolith: AI Legacy Code Strategies - Apply quality detective techniques to legacy systems

- Measuring AI Impact Beyond Gut Feeling - Quantify the business value of quality improvements

Dive Deeper:

- AI Interview Method for Scattered Thoughts - Extract quality insights through systematic questioning

- Multi-Phase Planning Documentation Bundles - Coordinate quality improvements across complex systems

Ready to start using AI as your code quality detective? Begin by documenting the areas where AI collaboration feels difficult, then ask your AI assistant to analyze those areas against software engineering principles—you might be surprised by what systematic quality issues it identifies.